This work was originally presented at the Applied Systems Engineering Workshop 2024, organized by Universidad Europea de Madrid.

By Daniel Villafañe Delgado

AI-Driven Functional Hazard Assessment in aviation safety | Artificial Intelligence for Systems Engineering (AI4SE)

Table of Contents

- Could an Artificial Intelligence (AI) assist the system safety engineering?

- CORSARIO project as a use case of system safety engineering enhanced with Artificial Intelligence

- Understanding the Functional Hazard Assessment (FHA) Process in Aviation Safety

- Leveraging Artificial Intelligence (AI) for Automated Functional Hazard Assessment (FHA)

- Use case of AI-powered Functional Hazard Assessment generation process

- Deploying Artificial Intelligence for Functional Hazard Assessment in Systems Engineering

- How to master generative Artificial Intelligence with advanced techniques for Systems Engineering

Could an Artificial Intelligence (AI) assist the system safety engineering?

Artificial Intelligence (AI) has shown extraordinary capabilities in data analysis, text processing and generation, and image recognition. At Anzen, we are exploring how AI can revolutionize systems safety engineering. In this article, we discuss the integration of AI into the Functional Hazard Assessment (FHA) for aviation safety.

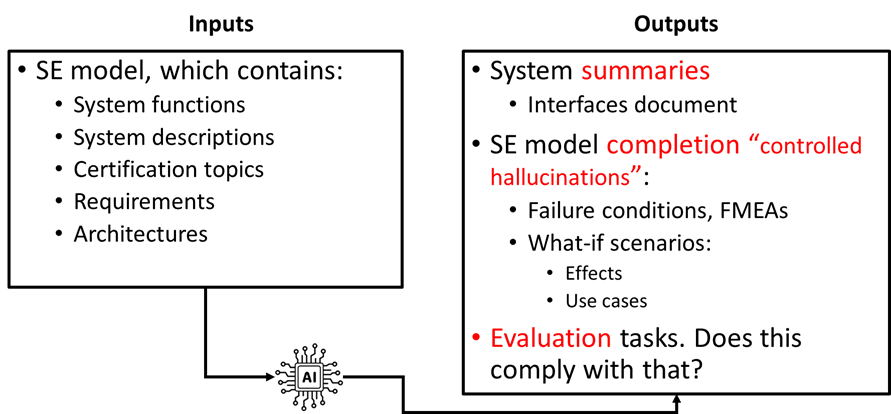

We foresee two main domains of application of AI:

- One is AI in aviation products and how it will affect current certification processes: for instance, an AI-based aircraft autopilot or AI-based pilot physiological monitoring. These are currently being discussed by the aviation authorities, and we don’t expect to see them on the market until 2030.

- The other is AI in tools. We, as systems engineers, generate a lot of documentation. Can you imagine no longer having to write documents such as the Functional Hazard Assessment, just by giving some prior information to the AI?

This study focuses on the second use case. However, some might think: under no circumstances should anyone trust AI-generated safety-related content, especially in aviation.

Well, so did we until we tried it.

This is why we started this project. We wanted to find out how good AI is at safety engineering, what inputs it needs and how the results can be improved.

But first, let’s talk about the Corsario project.

CORSARIO project as a use case of system safety engineering enhanced with Artificial Intelligence

The objective of Corsario is to develop a ubiquitous, high-bandwidth communication system for helicopters.

- The new system shall ensure connectivity at any time during a typical mission, not constrained by the availability and physical limitations of ground-based communications infrastructure. For this reason, the system will rely on communication with geostationary satellites, which are visible from nearly any location on Earth.

- The new system shall provide enough bandwidth for typical use cases such as transmitting and receiving large volumes of data, both asynchronously and in real time (phone and video calls). For this reason, the system will implement a Ka-band data link.

Anzen’s tasks in Corsario are:

- To provide safety and reliability analysis.

- To set up a model-based systems engineering framework.

One of the innovation objectives of Corsario is the integration of AI for safety engineering.

But what problem is Corsario trying to solve?

Well, there are three problems to solve. First, the antennas currently used on helicopters don’t have the bandwidth required for some applications, such as real-time data broadcasting. Second, these antennas are quite big and heavy because they use mechanical steering systems. Finally, the rotation of the rotor blades may interfere with the communication.

For this reason, Corsario will develop a new kind of antenna: a flat, electronically steerable antenna without mechanical parts, providing higher bandwidth and using an algorithm that avoids signal blockage.

This new antenna also conforms to the helicopter’s shape, reducing drag and therefore fuel consumption.

More information about Corsario (English version)

CORSARIO Project: The satellite communications system to be used in helicopters of the future

More information about Corsario (Spanish version)

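Oesía, Airbus y Anzen lanzan Corsario, un proyecto de comunicaciones satelitales para helicópteros (Oesía, Airbus and Anzen launch Corsario, a satellite communications project for helicopters)

Proyecto CORSARIO: El sistema de comunicaciones satelitales que portarán los helicópteros del futuro (CORSARIO project: the satellite communications system that the helicopters of the future will carry)

Corsario: Un proyecto innovador para la industria aeronáutica (Corsario: an innovative project for the aeronautical industry)

Un ‘Corsario’ en el aire: helicópteros con telecomunicaciones avanzadas (A ‘Corsario’ in the air: helicopters with advanced telecommunications)

Lanzan Corsario, un proyecto de comunicaciones satelitales para helicópteros (Corsario, a satellite communications project for helicopters, is launched)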

Understanding the Functional Hazard Assessment (FHA) Process in Aviation Safety

In aeronautics, safety engineering is a fundamental part of the systems engineering process, as described in ARP4754B. This makes a lot of sense because for aircraft, safety comes first. So, safety requirements must be correctly identified, derived, and completely verified.

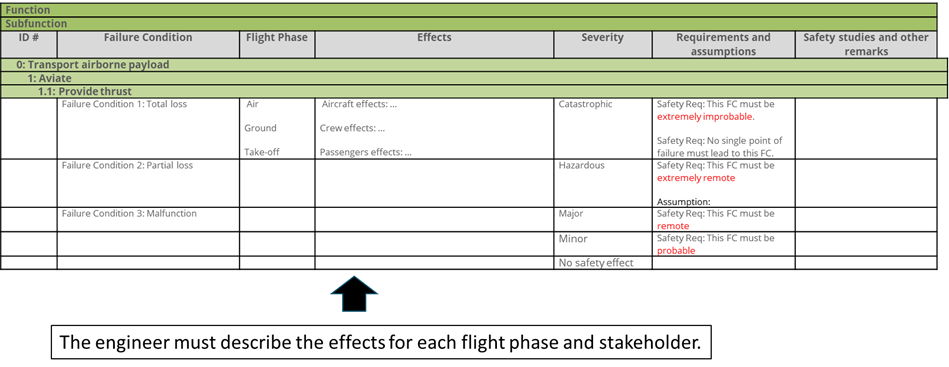

During the FHA (Functional Hazard Assessment, a system safety process described in ARP4761A), the potential failures causing total or partial loss of system functions are analysed. Malfunctions are also considered, for example an unintended activation or erroneous outputs. These failure scenarios are called failure conditions, and all of them must be identified.

Then the safety engineer must assess the effects that each failure condition could have on the aircraft, the occupants, and the crew. Moreover, these effects must be assessed not just once but for every flight phase.

Finally, once the effects of the failure conditions have been described, the safety engineer must classify each failure condition into one of five categories according to its severity: No Safety Effect, Minor, Major, Hazardous or Catastrophic.

This classification leads to the safety requirements that must be satisfied for every failure condition with some safety effect on the operation of the system. It can also help identify design requirements for the system functions, in order to prevent such failures or mitigate their effects.

So, roughly speaking, we take the functional requirements of the system and derive new requirements according to the consequences of each function’s failures.

Consider, for example, the function “Provide thrust”, which comes from requirement 1:

| ID | Requirement |
|---|---|
| Req 1 | The system must provide thrust. |
| Req 1.1 | The occurrence of the system’s failure condition “Insufficient thrust to maintain positive climb rate” must be extremely improbable. Note: it must be extremely improbable because its severity is Catastrophic. |
| Req 1.2 | The occurrence of the system’s failure condition “Inadvertent high thrust” must be remote. Note: it must be remote because its severity is Major. |
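The step from severity to required probability is mechanical, so it lends itself to a small helper. The Python below is a minimal, hypothetical sketch rather than our project tooling: `derive_safety_requirement` is an invented name, and the mapping assumes the usual CS-25 (AMC 25.1309) pairing of Catastrophic with extremely improbable, Hazardous with extremely remote, Major with remote and Minor with probable.

```python
from typing import Optional

# Qualitative probability requirement for each severity class, following the
# usual CS-25 / AMC 25.1309 pairing. "No Safety Effect" has no requirement.
ALLOWED_PROBABILITY = {
    "Catastrophic": "extremely improbable",
    "Hazardous": "extremely remote",
    "Major": "remote",
    "Minor": "probable",
    "No Safety Effect": None,
}


def derive_safety_requirement(req_id: str, failure_condition: str,
                              severity: str) -> Optional[str]:
    """Build the text of a derived safety requirement (hypothetical helper)."""
    if severity not in ALLOWED_PROBABILITY:
        raise ValueError(f"unknown severity: {severity!r}")
    probability = ALLOWED_PROBABILITY[severity]
    if probability is None:
        return None  # no safety effect, so no derived safety requirement
    return (f"{req_id}: The occurrence of failure condition "
            f"\"{failure_condition}\" must be {probability} "
            f"(severity: {severity}).")


print(derive_safety_requirement("Req 1.2", "Inadvertent high thrust", "Major"))
```

Returning `None` for No Safety Effect mirrors the rule that only failure conditions with some safety effect generate a safety requirement.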


These requirements are qualitative. To turn them into quantitative requirements, we must go to the aeronautical regulations. As per CS-25, a failure condition is classified by probability as follows:

| Probability | Meaning |
|---|---|
| Extremely improbable | Not anticipated to occur during the operational life of the entire fleet |
| Extremely remote | Not anticipated to occur during the operational life of an aircraft |
| Remote | Unlikely to occur during the operational life of an aircraft |
| Probable | Likely to occur during the operational life of an aircraft |
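To go one step further, AMC 25.1309 associates each qualitative term with an order-of-magnitude average probability per flight hour: upper bounds of about 1e-5 for remote, 1e-7 for extremely remote and 1e-9 for extremely improbable. The sketch below treats those orders of magnitude as hard boundaries, which is a simplifying assumption, and checks whether an estimated probability satisfies a qualitative requirement; `probability_term` and `meets_requirement` are hypothetical names.

```python
# Order-of-magnitude upper bounds on the average probability per flight hour
# associated with each qualitative term in AMC 25.1309. Treating them as hard
# boundaries is a simplification.
UPPER_BOUNDS = [
    ("Extremely improbable", 1e-9),
    ("Extremely remote", 1e-7),
    ("Remote", 1e-5),
]
# From most to least stringent.
ORDER = ["Extremely improbable", "Extremely remote", "Remote", "Probable"]


def probability_term(prob_per_flight_hour: float) -> str:
    """Return the qualitative term matching a per-flight-hour probability."""
    if not 0.0 <= prob_per_flight_hour <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    for term, upper_bound in UPPER_BOUNDS:
        if prob_per_flight_hour <= upper_bound:
            return term
    return "Probable"


def meets_requirement(prob_per_flight_hour: float, required_term: str) -> bool:
    """True if the estimate is at least as stringent as the required term."""
    achieved = ORDER.index(probability_term(prob_per_flight_hour))
    return achieved <= ORDER.index(required_term)


# Req 1.2 asks for "remote": an estimate of 3e-6 per flight hour satisfies it.
print(meets_requirement(3e-6, "Remote"))
```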

Leveraging Artificial Intelligence (AI) for Automated Functional Hazard Assessment (FHA)

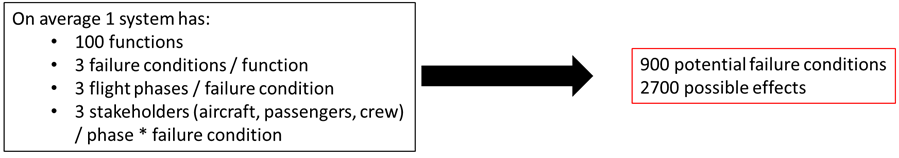

Just imagine a system with 100 functions. Considering total loss, partial loss and malfunction of each function in three main operational phases, the system could have up to 900 failure conditions (100 × 3 × 3), whose effects must be assessed for the aircraft, occupants, and crew, leading to up to 2,700 effects.
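The arithmetic behind these figures is a simple Cartesian product. The sketch below enumerates it in Python; the function identifiers and phase names are placeholders, since the three operational phases are not named here.

```python
from itertools import product

# Illustrative sizes from the text: 100 functions, three failure types and
# three operational phases; effects are assessed for three targets.
functions = [f"F{i:03d}" for i in range(1, 101)]
failure_types = ["total loss", "partial loss", "malfunction"]
phases = ["phase A", "phase B", "phase C"]  # hypothetical placeholder names
targets = ["aircraft", "occupants", "crew"]

# Every (function, failure type, phase) triple is a candidate failure condition.
failure_conditions = list(product(functions, failure_types, phases))
# Every failure condition must be assessed for every target.
effects_to_assess = list(product(failure_conditions, targets))

print(len(failure_conditions), len(effects_to_assess))  # prints: 900 2700
```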

It sounds like a lot of work. And it is.

As you can see, it is a highly manual, text-based process, which makes it a great candidate for AI automation.

Use case of AI-powered Functional Hazard Assessment generation process

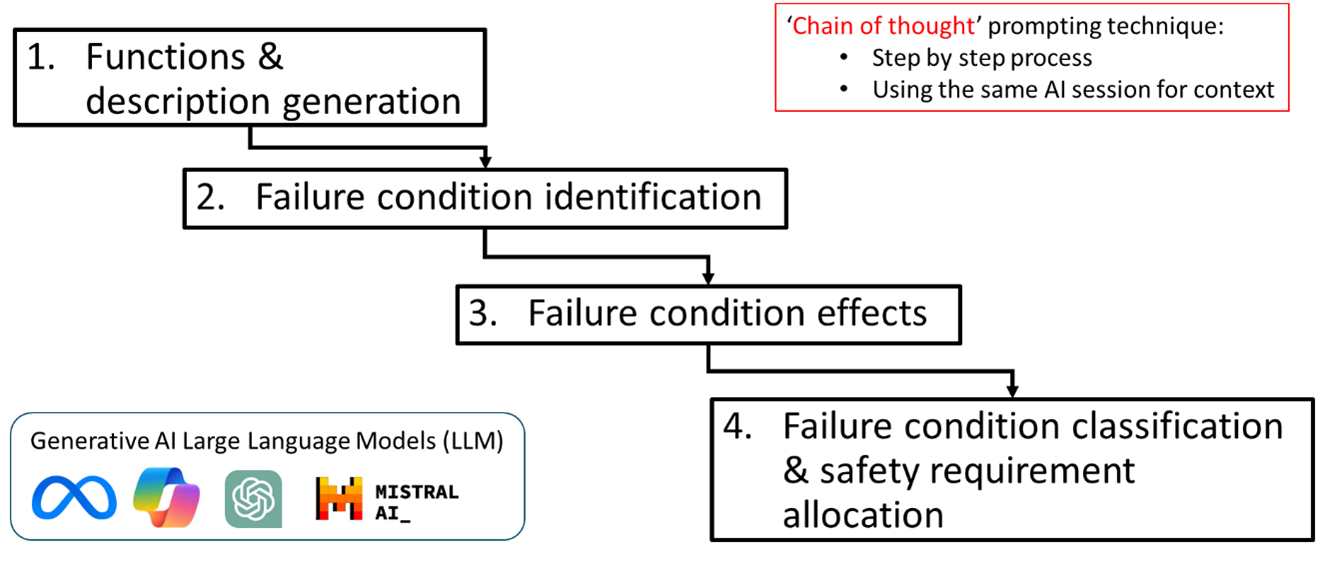

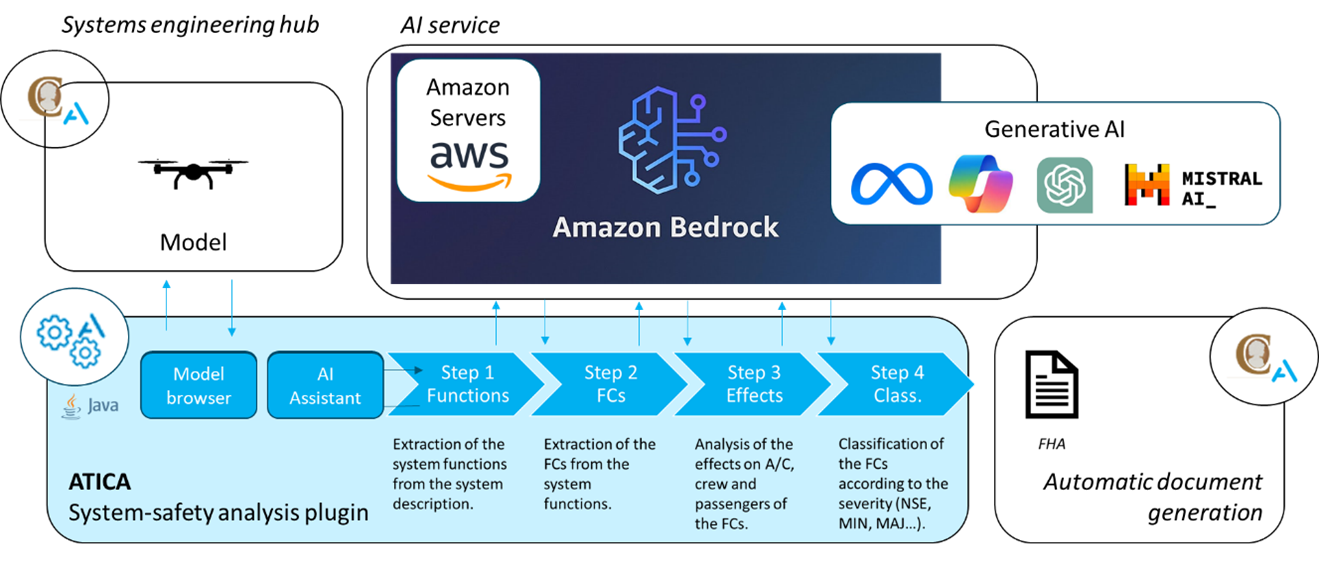

To produce an FHA with AI, we considered four steps:

- Functional description generation.

- Failure condition identification.

- Effects description.

- Classification of failure conditions according to effects.

Text generation is done by means of large language models. ChatGPT is probably the best-known model, but there are many others. We started by testing three of them: ChatGPT, Meta’s Llama2 and Microsoft’s Copilot.

During the first part of the project, we developed a chain-of-thought prompting technique that replicates the engineer’s process for producing the FHA.

Once the process was defined, the prompts for each step were systematically refined until the results were satisfactory.

For that purpose, we analysed hundreds of results from each model. We also learnt about the parameters that configure a language model, such as its temperature and the amount of context it is given.

After some trial and error, we selected a low temperature to make the results repeatable, and we used less context than initially expected to avoid a lack of diversity in the responses.
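To see why a low temperature favours repeatability, recall that language models typically sample the next token from a softmax over scores (logits) divided by the temperature. The sketch below uses made-up logits for three candidate tokens; it illustrates the general mechanism, not the internals of any particular model or provider API.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores into sampling probabilities at a given temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (1.0, 0.2):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

With these logits, the probability of the top token rises from about 0.63 at temperature 1.0 to about 0.99 at 0.2, so repeated runs almost always pick the same token.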

The final chain of prompts is roughly as follows:

1. Given a functional description of a system, identify its main functions and describe them.

2. Given the name and description of a function, provide its failure conditions.

3. For each failure condition obtained in step 2, describe its effects on the aircraft, occupants, and crew.

4. Given the descriptions from step 3, classify the failure conditions into the safety categories.
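The four-step chain can be sketched as functions that pass each step’s output to the next. This is a minimal illustration under stated assumptions: `ask` stands in for whatever model endpoint is used (here a canned stub, `fake_llm`, so the example runs offline), the prompt wording is paraphrased from the list, and the JSON shapes are assumptions rather than the prompts used in the project.

```python
import json
from typing import Callable

# Hypothetical LLM interface: takes a prompt, returns the raw text reply.
AskLLM = Callable[[str], str]


def identify_functions(ask: AskLLM, system_description: str) -> list:
    """Step 1: functional description -> list of {"name", "description"}."""
    prompt = ("Given the following functional description of a system, identify its "
              "main functions and describe them. Answer as a JSON list of objects "
              'with keys "name" and "description".\n\n' + system_description)
    return json.loads(ask(prompt))


def identify_failure_conditions(ask: AskLLM, function: dict) -> list:
    """Step 2: function name and description -> list of failure conditions."""
    prompt = (f"Function: {function['name']}\n"
              f"Description: {function['description']}\n"
              "Provide its failure conditions as a JSON list of strings.")
    return json.loads(ask(prompt))


def describe_effects(ask: AskLLM, function: dict, failure_condition: str) -> dict:
    """Step 3: failure condition -> effects on aircraft, occupants and crew."""
    prompt = (f"Function: {function['name']}\n"
              f"Failure condition: {failure_condition}\n"
              "Describe the effects on the aircraft, occupants and crew as a JSON "
              'object with keys "aircraft", "occupants" and "crew".')
    return json.loads(ask(prompt))


def classify(ask: AskLLM, failure_condition: str, effects: dict) -> str:
    """Step 4: failure condition and effects -> severity category."""
    prompt = (f"Failure condition: {failure_condition}\n"
              f"Effects: {json.dumps(effects)}\n"
              "Classify it as No Safety Effect, Minor, Major, Hazardous or "
              "Catastrophic. Answer with a JSON string.")
    return json.loads(ask(prompt))


def run_fha(ask: AskLLM, system_description: str) -> list:
    """Chain the four steps and return one row per failure condition."""
    rows = []
    for function in identify_functions(ask, system_description):
        for fc in identify_failure_conditions(ask, function):
            effects = describe_effects(ask, function, fc)
            rows.append({"function": function["name"], "failure_condition": fc,
                         "effects": effects, "severity": classify(ask, fc, effects)})
    return rows


def fake_llm(prompt: str) -> str:
    """Canned answers keyed on the prompt wording (offline demo only)."""
    if prompt.startswith("Given the following functional description"):
        return '[{"name": "Provide thrust", "description": "Generate propulsive force"}]'
    if "Provide its failure conditions" in prompt:
        return '["Loss of thrust", "Inadvertent high thrust"]'
    if "Describe the effects" in prompt:
        return '{"aircraft": "...", "occupants": "...", "crew": "..."}'
    return '"Major"'


print(len(run_fha(fake_llm, "An engine control system.")))  # prints: 2
```

Because each step only sees the previous step’s output, a weak answer early in the chain propagates downstream, which is one reason to review every step and not only the final table.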


At first, we were quite sceptical of the results because we didn’t know whether the models had been trained on aeronautical data.

However, it went better than we expected. Let’s see some of the results.

Functions description

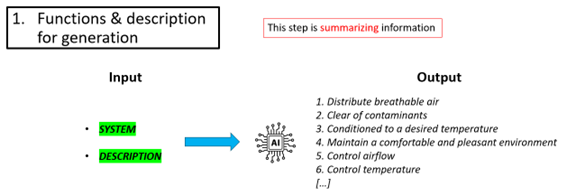

Given the system name and a functional description of the system, the AI returns the functions of the system. This step could also take a functional requirements specification instead of a functional description. The AI is quite good at this step because it is essentially summarizing the given input.

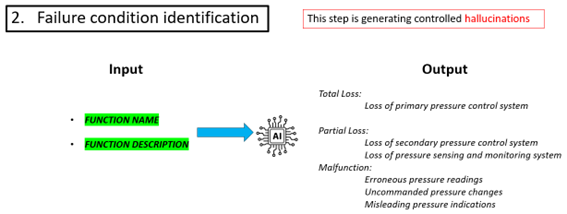

Failure conditions identification

Given the function name and its description, the AI returns the failure conditions. In this step we depend on the “creativity” of the AI, which I like to call “controlled hallucinations”. In the previous step the AI was summarizing information, but in this step it is creating new information: we are speculating about what could go wrong, and the AI is helping us do so.

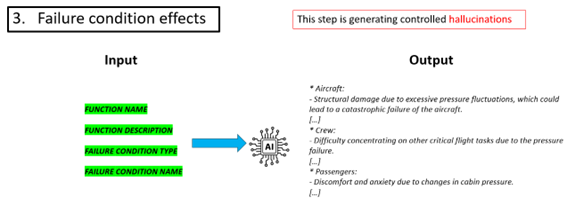

Failure condition effects

Given the function name, the description and a failure condition, the AI returns the effects. Here we are relying on “controlled hallucinations” again. However, in this case the results were impressive and surprised many of our safety engineers with their quality.

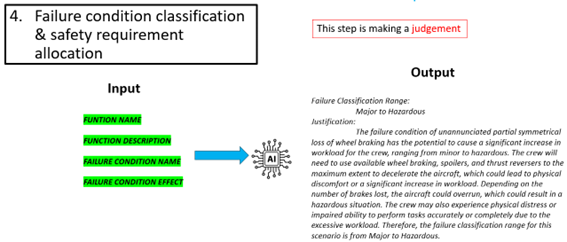

Failure condition classification

Given all the previous information, the AI returns a range for the failure condition classification. Unfortunately, this is the weakest step. This is because we are asking the AI for a judgement, which is not the kind of task it is good at. In addition, it is difficult to know why the AI chooses one severity over another. So there is still room to improve it.
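Because the AI returns a range rather than a single class, the tool has to decide what to show the engineer. One conservative option, sketched below as an assumption rather than the rule used in the project, is to order the five categories and pre-select the most severe end of the range, leaving the final decision to the safety engineer. The “low-high” string format (for example `Major-Hazardous`) is a hypothetical output convention.

```python
from enum import IntEnum


class Severity(IntEnum):
    """The five FHA severity categories, ordered from least to most severe."""
    NO_SAFETY_EFFECT = 0
    MINOR = 1
    MAJOR = 2
    HAZARDOUS = 3
    CATASTROPHIC = 4


def parse_severity(label: str) -> Severity:
    # "No Safety Effect" -> "NO_SAFETY_EFFECT", "Major" -> "MAJOR"
    return Severity[label.strip().upper().replace(" ", "_")]


def conservative_pick(ai_range: str) -> Severity:
    """Return the most severe class in an answer such as 'Major-Hazardous'."""
    return max(parse_severity(label) for label in ai_range.split("-"))


print(conservative_pick("Major-Hazardous").name)  # prints: HAZARDOUS
```

Picking the worst case errs on the safe side; the engineer can then downgrade it with a documented rationale, which also addresses the lack of explanation for the AI’s choice.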


This evaluation exercise gave us some interesting metrics on the quality of the generated content, measured in terms of its usefulness to the engineer. These metrics are quite subjective, but we will use them as a baseline for comparison with future projects.

Usefulness evaluates not only the technical quality of the response but also how good the AI-generated content is as a template, bearing in mind that none of the generated content can pass through the safety process without real, verifiable human action.

| Step | Usefulness score (out of 10) |
|---|---|
| Generation of functions and/or function descriptions | 7 |
| Generation of failure conditions | 6 |
| Effects identification | 8 |
| Severity classification | 4 |


Nevertheless, there was one important issue to be solved.

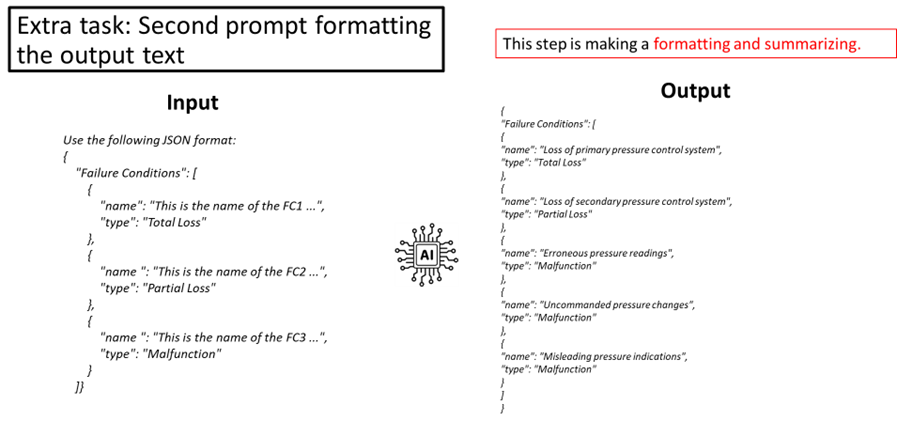

One extra task was needed: if the process was to be automated, we would have to integrate the answers into the system model. Otherwise, we would be copy-pasting all the time.

Formatting the answers

So we needed to force the language models’ output into a very specific format. In this case, we chose JSON.
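Forcing a format in the prompt is not a guarantee, so a typical safeguard is to parse each reply and reject anything that does not match the expected shape before it reaches the system model. The sketch below uses only Python’s standard `json` module; the field names (`failure_condition`, `aircraft`, `occupants`, `crew`) are an assumed schema for step 3, not the format used in the project. Some model APIs also offer a dedicated JSON output mode, which reduces but does not remove the need for this check.

```python
import json

FENCE = "`" * 3  # Markdown code fence that models sometimes wrap JSON in
REQUIRED_KEYS = {"failure_condition", "aircraft", "occupants", "crew"}


def parse_effects_reply(reply: str) -> dict:
    """Parse an LLM reply that should contain one JSON object with string fields."""
    text = reply.strip()
    if text.startswith(FENCE):
        # Drop the surrounding fence and an optional "json" language tag.
        text = text.strip("`").removeprefix("json").strip()
    data = json.loads(text)  # raises json.JSONDecodeError (a ValueError) if malformed
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    if not all(isinstance(data[key], str) for key in REQUIRED_KEYS):
        raise ValueError("all required fields must be strings")
    return data


def is_valid_reply(reply: str) -> bool:
    """True if the reply parses and matches the expected schema."""
    try:
        parse_effects_reply(reply)
    except ValueError:
        return False
    return True
```

A reply that fails validation can simply be re-requested, which is cheaper than repairing malformed content by hand.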


There are two important drawbacks that make the AI integration with systems engineering processes a manual and difficult task:

- First, the prompts are made ad-hoc for the FHA. Any other process must be assessed again to produce its own prompts and process.

- In addition, we are concerned that the prompts might not give satisfactory results with other language models, in which case they would need to be reassessed.

Nevertheless, in general terms, we would be able to replicate the same process for many of our safety engineering deliverables, provided that we split the problem into small, manageable tasks, creating a chain of thought, and that each step is given the correct inputs!

Deploying Artificial Intelligence for Functional Hazard Assessment in Systems Engineering

So now we have a suitable language model with proper prompts that give us good results. We have been using online playgrounds to test the process, and the next step is to replace those playgrounds with an application that interacts with the model.

And here comes another trade-off.

Full privacy means having the language model installed locally, so only open-source models such as Llama2 or Mistral would be available. Worse still, we would need a large hardware purchase to run those models in a reasonable time.

On the flip side, we could use a model deployed as a service on a cloud platform such as Amazon Web Services or Hugging Face, which provide infrastructure for this kind of application.

The hardware issue led us to choose the online option. We also learnt that, while OpenAI’s service would lock us into their own models, Amazon’s solution offered a range of models from different AI providers.

So we connected our model-based systems engineering framework application (Cameo) to Amazon’s service and started prompting with data retrieved from the system model, automatically filling in the content we needed for each step.

Here is a demonstration of the first step, made with two functions (mapped in SysML as activities with our own stereotypes).

The next video demonstrates steps 2 and 3. In this case, we are not only interacting with the AI but also creating new model elements from the responses it returns. An important aspect to note is that the tool does not just return the raw content provided by the AI; it also gives that content structure according to the metamodel description.
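The structuring step can be pictured as turning validated JSON into typed elements that mirror the metamodel. The Cameo plugin itself is not shown in this post, so the sketch below is a tool-independent illustration in Python: the classes `Function`, `FailureCondition` and `Effect`, their fields, and the helper `attach_ai_results` are hypothetical stand-ins for the project’s own stereotypes, and the example effects are made up.

```python
from dataclasses import dataclass, field


@dataclass
class Effect:
    target: str        # "aircraft", "occupants" or "crew"
    description: str


@dataclass
class FailureCondition:
    name: str
    effects: list[Effect] = field(default_factory=list)


@dataclass
class Function:
    name: str
    failure_conditions: list[FailureCondition] = field(default_factory=list)


def attach_ai_results(function: Function, failure_conditions: list[str],
                      effects: dict[str, dict[str, str]]) -> None:
    """Create child elements for each AI-generated failure condition and its effects."""
    for fc_name in failure_conditions:
        fc = FailureCondition(fc_name)
        for target, text in effects.get(fc_name, {}).items():
            fc.effects.append(Effect(target, text))
        function.failure_conditions.append(fc)


# Usage with made-up AI output for the "Provide thrust" example:
thrust = Function("Provide thrust")
attach_ai_results(
    thrust,
    ["Inadvertent high thrust"],
    {"Inadvertent high thrust": {"aircraft": "Unexpected acceleration",
                                 "crew": "Increased workload"}},
)
print(len(thrust.failure_conditions[0].effects))  # prints: 2
```

Keeping the element types explicit means a malformed answer fails at construction time instead of silently producing an inconsistent model.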

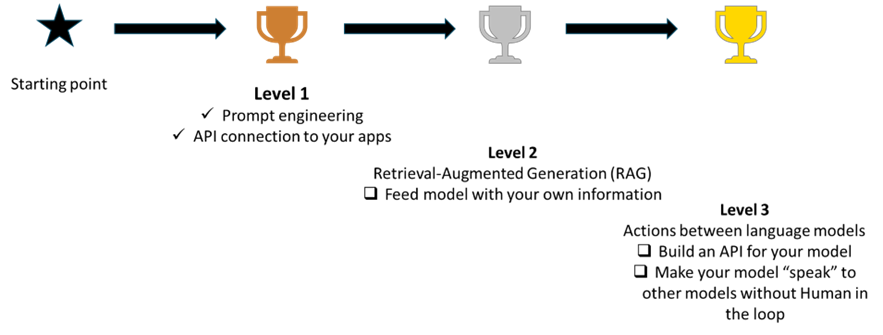

How to master generative Artificial Intelligence with advanced techniques for Systems Engineering

We started this AI journey some months ago and have reached what we call level 1. But there are still some interesting improvements that we will explore over the coming months.

Level 2 adds a technique called RAG (Retrieval-Augmented Generation). This technique consists of feeding the language model with your own data when it is queried, with no need to train or fine-tune the model.

RAG combines the language model with retrieval of your existing information, enhancing the context the AI is given and improving its responses with domain-specific concepts. For example, the AI could look up information related to a system you are working on.
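A toy version of the retrieval step helps make the idea concrete. The sketch below scores a few project notes (paraphrased from the Corsario description above) by word overlap with the question and prepends the best matches to the prompt. Real RAG pipelines normally use vector embeddings and a vector store instead of word overlap; that substitution is deliberate here to keep the example dependency-free, and the function names are hypothetical.

```python
import re


def words(text: str) -> set[str]:
    """Lower-case word set used as a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the query."""
    q = words(query)
    ranked = sorted(documents, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:k]


def build_augmented_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Prepend the retrieved snippets to the question as extra context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (f"Use the following project information where relevant:\n{context}\n\n"
            f"Question: {query}")


notes = [
    "The antenna is flat and electronically steerable, with no mechanical parts.",
    "The data link operates in Ka band via geostationary satellites.",
    "Rotor blade rotation can block the satellite signal.",
]
print(build_augmented_prompt("What band does the data link use?", notes, k=1))
```

The model itself is unchanged; only the prompt grows, which is why RAG needs no training or fine-tuning.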

Level 3 goes one step further. Here we will have what I call “actions between language models”: building an API for your language model and making it “speak” with other models through their APIs, and not only with other models but with anything that has an API.

That is all for now. We have seen how Artificial Intelligence can be used to automatically generate a Functional Hazard Assessment. The results have been good, but there is still room for improvement.

Do you want to know more? Stay tuned for new applications and techniques of Artificial Intelligence in systems safety engineering, or contact us for more information.

Aerospace Engineer with expertise in avionics, systems engineering and model-based design and analysis. At Anzen, Daniel’s work is focused on ATICA, our model-based product. Daniel is in charge of building system models and applying systems engineering processes, using ATICA to improve the results of safety and reliability analyses for aerospace avionics projects.

I am interested in finding out how I might be able to use Anzen’s products for MBSA with Cameo-based MBSE models.

Hello Jonathan. Thanks for your comment.

We usually do MBSA with Capella, which is why our products are distributed as Capella plugins.

However, as you can see in this post, we have worked with Cameo as well, for a specific project. We created a safety profile with elements quite similar to ARP4761’s artifacts and then developed a Cameo plugin to connect the model to other MBSA “services”, such as the AI assistant described in this post. The same approach would work for our Failure Propagation and Markov solvers, which are already available for Capella.

Please, if you have specific needs around MBSA, let us know!